False Positive or Noise? Smart security teams still get this wrong

Before you report a security finding as a "false positive", make sure you distinguish between FPs and noise. The difference matters, and more people get it wrong than you'd expect.

After 20 years in infosec, I've seen even excellent security professionals make the same fundamental mistake over and over again—misreporting findings as false positives. Now, I've encountered my fair share of errors in reports from security tools, including some pretty egregious false positives. But I've also seen a lot of very skilled, very smart people fundamentally misunderstand what a false positive actually is, and end up reporting or complaining of "false positives" (FPs) inappropriately. Actually, this is true for false negatives as well, but that's another article.

A False Positive (FP) is a factual assertion that isn't true. It does not mean "something that's annoying or useless or not important" – that's noise. It does not mean "something that ends up posing too little risk for us to act on" – that's just an acceptable risk.

Consider a straightforward example: you run an SCA tool on your Python repository, and it says "you have foobar@1.2.0 and it has CVE-20xx-1234".

- You don't actually depend on foobar@1.2.0? False Positive

- foobar@1.2.0 doesn't actually have that CVE associated with it? False Positive

- The way you use foobar@1.2.0 means it's impossible to exercise the CVE? Not an FP! It's noise (information that's not useful to you), but it's a True Positive for the test.

Now, if the tool is saying "you are vulnerable to CVE-20xx-1234 because...", and you are not in fact vulnerable, that's a False Positive.
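To make the distinction concrete, here is a minimal sketch of judging an SCA finding strictly against the claim the tool made. Everything in it is hypothetical and for illustration only: the `ScaFinding` fields, the `judge` function, and the `installed` and `advisories` lookups do not correspond to any real tool's schema or API.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    TRUE_POSITIVE = "true positive"
    FALSE_POSITIVE = "false positive"


@dataclass(frozen=True)
class ScaFinding:
    # Hypothetical fields; real SCA tools use their own schemas.
    package: str
    version: str
    cve: str
    # True only if the tool asserts "you are vulnerable", not merely
    # "you have this package and it has this CVE".
    claims_vulnerable: bool = False


def judge(finding, installed, advisories, exploitable):
    """Judge a finding only against the claim the tool actually made."""
    # Claim 1: you depend on this package at this version.
    if installed.get(finding.package) != finding.version:
        return Verdict.FALSE_POSITIVE
    # Claim 2: that package version has this CVE associated with it.
    known_cves = advisories.get((finding.package, finding.version), set())
    if finding.cve not in known_cves:
        return Verdict.FALSE_POSITIVE
    # Claim 3 (only if the tool made it): you are actually vulnerable.
    if finding.claims_vulnerable and not exploitable:
        return Verdict.FALSE_POSITIVE
    return Verdict.TRUE_POSITIVE


installed = {"foobar": "1.2.0"}
advisories = {("foobar", "1.2.0"): {"CVE-20xx-1234"}}

presence_claim = ScaFinding("foobar", "1.2.0", "CVE-20xx-1234")
vulnerable_claim = ScaFinding("foobar", "1.2.0", "CVE-20xx-1234", claims_vulnerable=True)

# Same facts, same unexploitable usage, different claims:
print(judge(presence_claim, installed, advisories, exploitable=False).value)    # true positive
print(judge(vulnerable_claim, installed, advisories, exploitable=False).value)  # false positive
```

Note that exploitability is only consulted when the tool claimed it. For the plain "you have this package and it has this CVE" claim, an unexploitable usage leaves the finding a true positive, which is exactly the case that gets mislabeled as an FP.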

The key to understanding the difference is understanding what our tools are actually testing, and therefore what claim they're actually making when they generate a finding.

It's about the scope of the test

Whether something is an FP depends entirely on one thing: what question does the test answer?

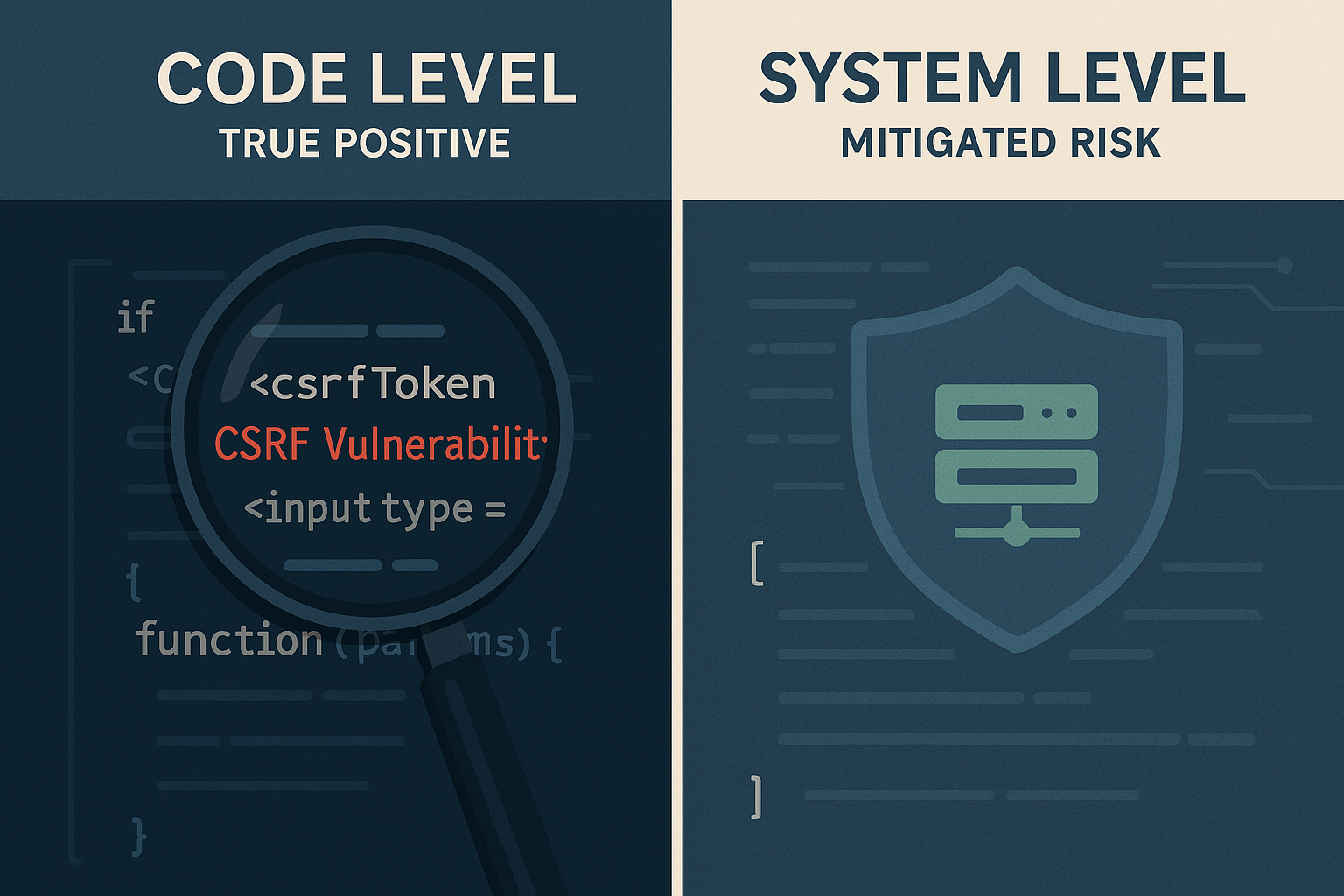

For example, SAST scans attempt to answer "does this code, as written, have a security weakness?" If the test says it does, and it does – that's a true positive! And it remains a true positive even if you find the risk acceptable, have compensating controls that drive it to zero, or are using that code in a way that the weakness isn't exploitable.

If a SAST scan says "you have a CSRF weakness", but your code actually sets and checks a properly implemented anti-CSRF token, then that's an FP—the scan is making a factually false claim about a weakness in your code. But what if your production deployment is adding these tokens via an application server or proxy? The finding is still a true positive; you just have a compensating control that means you don't need to act on it. The finding can be considered noise—not relevant to your environment or security program—but not FP.

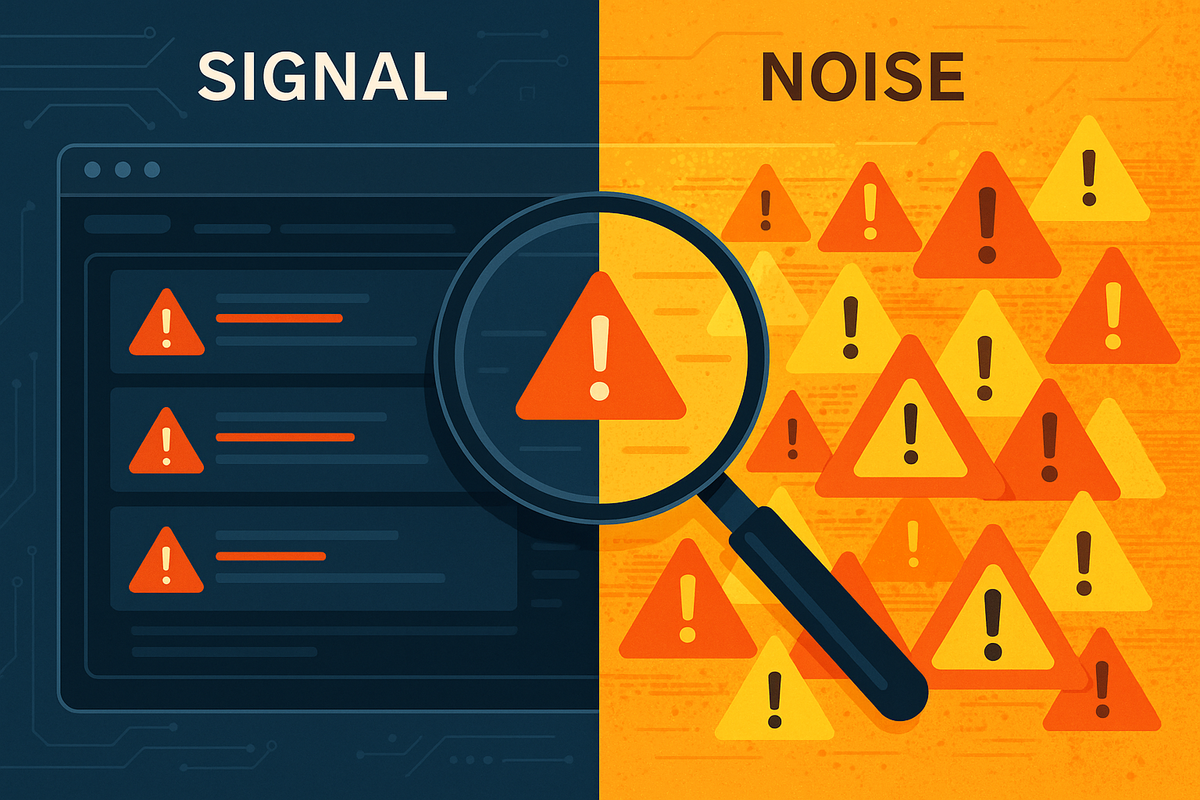

It's important not to lose track of the purpose of reviewing findings in the first place: you're trying to eliminate noise. Actual FPs are always noise; because they're false assertions, they waste your time. But once you've eliminated FPs from your result set, what remains must still be sorted into signal and noise. And many of the criteria you will use for that will be subjective, or at least context-dependent.
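One way to picture this two-stage sorting is the sketch below. It is an illustration under stated assumptions, not a prescribed process: the `Finding` fields and the `triage` function are hypothetical names, and the second stage is reduced to two booleans where a real program would apply its own threat model and risk tolerance.

```python
from dataclasses import dataclass
from enum import Enum


class Triage(Enum):
    FALSE_POSITIVE = "false positive (always noise)"
    NOISE = "true positive, but noise for us"
    SIGNAL = "true positive, act on it"


@dataclass(frozen=True)
class Finding:
    # Hypothetical fields for illustration only.
    title: str
    claim_is_true: bool         # does the assertion hold within the test's scope?
    compensating_control: bool  # e.g. a proxy that adds anti-CSRF tokens
    risk_accepted: bool         # the organization has accepted this risk


def triage(finding: Finding) -> Triage:
    # Stage 1: factual check. A false assertion is an FP, full stop.
    if not finding.claim_is_true:
        return Triage.FALSE_POSITIVE
    # Stage 2: context-dependent relevance. The finding is true either way;
    # the only question left is whether it needs action here.
    if finding.compensating_control or finding.risk_accepted:
        return Triage.NOISE
    return Triage.SIGNAL


findings = [
    Finding("CSRF: code already checks a valid token", False, False, False),
    Finding("CSRF: tokens added by the proxy in production", True, True, False),
    Finding("CSRF: no token anywhere", True, False, False),
]

for f in findings:
    print(f"{f.title} -> {triage(f).value}")
```

Keeping the two stages separate means the "FP" label stays reserved for factually wrong findings, while everything dismissed in the second stage is recorded as a true positive that your organization chose not to act on.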

Noise reduction is on you

It's great when vendors put good work into making their products less noisy: for example, when an SCA tool examines whether you're using a vulnerable dependency in a safe way and categorizes the safe usage instances as noise.

But no vendor can completely solve this problem for you. What counts as noise depends on applying your deep knowledge and expertise to your organization's threat model and risk tolerance. Individual tools with noise-reduction features help you automate some of that decision-making, as do things like ASPMs that have high-quality correlation systems (which, sadly, a lot of ASPMs lack). But ultimately, it's up to you to build systems and processes that effectively evaluate reported flaws to find which ones need to be acted on and which ones are noise for your organization.

And yes, that system should also have a pathway for you to identify FPs, because while not all noise is an FP, all FPs are noise.

So the next time you look at a finding and think "argh, this is another FP!", take a second to check yourself—is it actually a false positive, or is it a true positive that's just noise for your organization? Don't label valid findings as "FP" when they're just noise for you.